One of the advantages of building something yourself is that if you’re not happy with it you can tweak, change, modify and adapt until you are. But one of the disadvantages is that sometimes you get so caught up in all the tweaking, changing and adapting that you overlook a much simpler solution.

So I had a harvester that could save the publication details and content of all the newspaper articles in a search on Trove. But the warm glow of self-satisfaction quickly began to fade as I started to think about how I wanted to use the content I was harvesting.

The harvester saved the text of articles organised in directories by newspaper title. This seemed to make sense: it meant that you could easily analyse and compare the content of different newspapers. But what if you wanted to examine changes over time? In that case it’d be much easier if the articles were organised by year; then I could just pull out the folder for a particular year, feed it to VoyeurTools, and start tracking the trends.

There ensued some minor tinkering. As a result, you can now pass an additional option to the harvest script, telling it whether to save the article texts and PDFs in directories by year or by newspaper. Simply set the ‘zip-directory-structure’ option in harvest.ini to either ‘title’ or ‘year’. If you’re using the command line, you can use the ‘-d’ flag to set your preference. Easy.
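To make the year/title switch concrete, here is a minimal Python sketch of how a harvester might choose the folder for each article. Only the ‘title’ and ‘year’ values come from the option described above; the function name `article_directory` and its arguments are hypothetical, not the harvester's actual code.

```python
import os
from datetime import date


def article_directory(base_dir: str, structure: str, title: str, published: date) -> str:
    """Return the directory an article should be saved in (hypothetical helper).

    `structure` mirrors the 'title' / 'year' values of the
    zip-directory-structure option; everything else is illustrative.
    """
    if structure == "title":
        # Group by newspaper; swap path separators so a title can't create subfolders.
        subdir = title.replace(os.sep, "_")
    elif structure == "year":
        # Group by year of publication, ready to feed a whole year to a text-analysis tool.
        subdir = str(published.year)
    else:
        raise ValueError(f"unknown directory structure: {structure!r}")
    return os.path.join(base_dir, subdir)
```

Switching between the two layouts then only changes which folder name is computed; the saving code itself stays the same.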

But that set me wondering whether it might be possible to generate an overview, showing the number of articles matching a search over time. So I started on a modification of my harvest script that did just that — cycling through the search results, adding up the numbers. It wasn’t until I ran the new script for the first time that I realised there was a much simpler alternative.

All I needed to do was repeat the search for each year in the search span and grab the total results value from the page. D’uh…

So instead of sending hundreds or perhaps thousands of requests to Trove, all I needed was one for each year. From there it was easy and soon I had my first graph.
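The one-request-per-year idea can be sketched in Python as follows. This assumes the date limits can be added to the search URL as `dateFrom`/`dateTo` query parameters (an illustrative guess, so check the URLs Trove generates when you limit a search by date), and it leaves the actual page fetching and total scraping to a caller-supplied `fetch_total` function, since the results-page markup isn't described here.

```python
from typing import Callable, Dict
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit


def year_query_url(query_url: str, year: int) -> str:
    """Limit a search URL to a single year.

    ASSUMPTION: 'dateFrom'/'dateTo' are illustrative parameter names only.
    """
    parts = urlsplit(query_url)
    # Keep the original search terms, dropping any existing date limits.
    params = [(k, v) for k, v in parse_qsl(parts.query) if k not in ("dateFrom", "dateTo")]
    params += [("dateFrom", f"{year}-01-01"), ("dateTo", f"{year}-12-31")]
    return urlunsplit(parts._replace(query=urlencode(params)))


def totals_by_year(
    query_url: str, start: int, end: int, fetch_total: Callable[[str], int]
) -> Dict[int, int]:
    """Send one request per year instead of paging through every result.

    fetch_total is a hypothetical, caller-supplied function that loads a
    results page and returns its total-results figure.
    """
    return {year: fetch_total(year_query_url(query_url, year)) for year in range(start, end + 1)}
```

A search spanning 1850 to 1950 therefore costs 101 requests, however many thousands of articles it matches.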

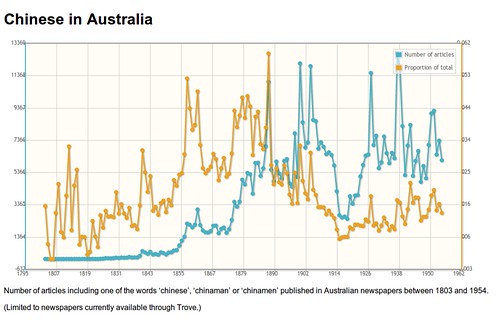

I was pretty pleased with that, but of course raw article counts on their own are rather misleading. The more interesting question was what proportion of all the articles for a given year matched the search. Another quick tweak and I was grabbing the overall totals and calculating the proportions.
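Turning raw counts into proportions is just a per-year division. A minimal sketch, assuming the matching totals and the overall totals are both held as `{year: count}` dictionaries (the function name is hypothetical):

```python
from typing import Dict


def ratios_by_year(matches: Dict[int, int], overall: Dict[int, int]) -> Dict[int, float]:
    """Express each year's matching articles as a proportion of all articles that year.

    Years with a missing or zero overall total are skipped rather than
    dividing by zero.
    """
    return {
        year: matches[year] / overall[year]
        for year in sorted(matches)
        if overall.get(year)
    }
```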

At this point I invited my Twitter followers to suggest some possible topics — you can see the results on Flickr.

But what do the peaks and troughs represent? I wanted to use the graphs as a way of exploring the content itself. This was possible because I’d saved the data as JSON and used jqPlot to create the graphs in an ordinary HTML page. Courtesy of some clever hooks in the backend of jqPlot, I could capture the value of any point as it was clicked. That gave me the year, so all I had to do was combine it with the search keywords and send off a request to Trove.

So now instead of just looking at the graphs, you could explore them.

Perhaps you’re wondering how I managed to pull the Trove results into the page? Just a bit of simple AJAX magic combined with my own unofficial Trove API. (More about that in the next exciting installment!)

I’ve created a little gallery of graphs to explore. I’m still open to suggestions!

The code for gathering the data is all on Bitbucket, so you can start building your own. Just run the ‘do_totals.py’ script in the bin directory from the command line. The script takes two flags:

- -q (--query): the URL of your Trove search (compulsory)

- -f (--filename): the path and filename for your data file (don’t include an extension)
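For anyone rebuilding this from scratch, the two flags map naturally onto Python's `argparse`. This is an illustrative sketch rather than the actual contents of do_totals.py; in particular, the default filename is a made-up placeholder.

```python
import argparse


def parse_args(argv=None):
    """Parse the -q/--query and -f/--filename options (illustrative sketch)."""
    parser = argparse.ArgumentParser(description="Summarise a Trove search by year.")
    # The query URL is compulsory, as described above.
    parser.add_argument("-q", "--query", required=True, help="URL of your Trove search")
    # HYPOTHETICAL default; the real script's behaviour without -f isn't stated.
    parser.add_argument(
        "-f", "--filename", default="totals",
        help="path and filename for the data file, without an extension",
    )
    return parser.parse_args(argv)
```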

The script will create a JavaScript file containing two JSON objects, ‘totals’ and ‘ratios’. These can then be fed to jqPlot; view the source of one of my interactive graphs to see how.
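A file of that kind could be produced along these lines. The sketch assumes each series is written as a list of `[year, value]` pairs (a shape jqPlot accepts for line series) and declared as a plain JavaScript variable; the real script's output layout may differ, and the function names are hypothetical.

```python
import json
from typing import Dict


def as_pairs(series: Dict[int, float]) -> list:
    """Convert {year: value} into [[year, value], ...] sorted by year."""
    return [[year, series[year]] for year in sorted(series)]


def render_data_js(totals: Dict[int, int], ratios: Dict[int, float]) -> str:
    """Render both series as JavaScript variable declarations."""
    return (
        "var totals = " + json.dumps(as_pairs(totals)) + ";\n"
        + "var ratios = " + json.dumps(as_pairs(ratios)) + ";\n"
    )


def write_data_file(filename: str, totals: Dict[int, int], ratios: Dict[int, float]) -> str:
    """Write the data file; the -f value is given without an extension, so add '.js'."""
    path = filename + ".js"
    with open(path, "w", encoding="utf-8") as f:
        f.write(render_data_js(totals, ratios))
    return path
```

A page that loads this file with a `<script>` tag could then pass `[totals]` or `[ratios]` as the data argument to `$.jqplot`.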

Of course it would be really nice to create a web service where people could create, share, compare and combine their graphs — but that might have to await a generous benefactor…

This work is licensed under a Creative Commons Attribution 4.0 International License.

[…] won't release an official API is a bit mysterious.) He uses that to scrape Trove to do searches and display results which aren't possible with the interface offered by the NLA, such as plotting the frequency of […]

[…] want to do this yourself check out Wragge’s posts, Mining the Treasures of Trove (Part 1) and (Part 2). Firstly let’s look at Wragge’s graph of a topic that I have been writing about this […]

[…] post about analysing information held by Trove, this post explains how the tools created by Wragge can prove a boon for […]

[…] The first step was to identify and collect the documents that I wanted to analyse. I used Sherratt’s “harvester” to collect all newspaper articles containing the phrase “secular education”. The search I constructed in Trove returned over 7,800 articles. It would take a ridiculously long time to click on each article, download it and save it. This became a feasible exercise with Sherratt’s tool. After I had run it, the articles were all saved as text files and could be found in subdirectories according to the year they were published. The file names included the name of the newspaper, the date and page where the article was found. Wonderful! I encourage you to have a go using the harvester. It requires a bit of work but Tim Sherratt has given good instructions in his posts, Mining the Treasures of Trove (Part 1), and Part 2. […]

Hi Tim,

What you’re doing with TROVE is very similar to what we’d like to be doing. We are running a citizen science program called OzDocs, where people help extract climate history information from sources like TROVE. So sometimes we need to pull out bulk images or text to hand over to volunteers to sort through. Your Harvester looks good for that, but I have a bit of a problem with one task that we are working on and was wondering if you had any ideas. We want to digitise all the pressure tables published in the Argus in the 1850s. However, when we do a keyword search we get less than 50% of what is really there, which I guess is because of the OCR. Is there a way we could select dates or date ranges and just pull the best-quality image possible of page 2 of every edition within that range?

Thanks,

Josh

Josh,

There may well be simpler ways, but it seems to me you could script something by looking at what’s going on behind the scenes on the ‘Find an issue’ page. If you have a poke around, you’ll see that there’s a very useful JSON call to http://trove.nla.gov.au/ndp/del/titlesOverDates/{year}/{month}. For example, to find all the issues from January 1901:

http://trove.nla.gov.au/ndp/del/titlesOverDates/1901/01

Once you have that, you can pull out all the issues of the title you’re after using the appropriate title id (‘13’ for the Argus). You’ll then have a list of issue numbers. Calling an issue number (e.g. http://trove.nla.gov.au/ndp/del/issue/26777) seems to return the first page of that issue. I suppose you could assume that the second page’s id will simply be the first page’s id + 1, but I don’t know how safe that is. A safer approach would be to grab the id for the page you want from the page-selection drop-down. Once you have the id for the page you want, it’s an easy matter to download the PDF using a URL of the form: http://trove.nla.gov.au/ndp/imageservice/nla.news-page{page id}/print
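The three URL patterns above can be wrapped in small helper functions. These are sketches built only from the endpoints mentioned in this comment; they are undocumented Trove internals, so treat them as assumptions that may no longer hold.

```python
# Base path taken from the URLs quoted above (undocumented, may change).
BASE = "http://trove.nla.gov.au/ndp"


def titles_over_dates_url(year: int, month: int) -> str:
    """JSON listing of all issues for a given month; the month is zero-padded."""
    return f"{BASE}/del/titlesOverDates/{year}/{month:02d}"


def issue_url(issue_id: int) -> str:
    """Calling an issue id seems to return the first page of that issue."""
    return f"{BASE}/del/issue/{issue_id}"


def page_pdf_url(page_id: int) -> str:
    """Printable PDF for a single newspaper page."""
    return f"{BASE}/imageservice/nla.news-page{page_id}/print"
```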

All of this would be easily scriptable. You’d just need to feed your script the years to harvest and it would grab all the relevant pdfs for you.
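A driver for that loop might look like the following sketch. Because the structure of the `titlesOverDates` JSON and the page drop-down isn't spelled out here, the two lookups are passed in as hypothetical callables (`list_issues` and `find_page_id`) that you would write after inspecting the responses yourself.

```python
from typing import Callable, Iterable, List


def harvest_pdf_urls(
    years: Iterable[int],
    title_id: str,
    list_issues: Callable[[int, int, str], List[int]],  # HYPOTHETICAL: issue ids for a title in a month
    find_page_id: Callable[[int, int], int],  # HYPOTHETICAL: page id for an issue and page number
    page_number: int = 2,
) -> List[str]:
    """Collect PDF URLs for one page of every issue of a title across the given years."""
    urls = []
    for year in years:
        for month in range(1, 13):
            for issue_id in list_issues(year, month, title_id):
                page_id = find_page_id(issue_id, page_number)
                urls.append(f"http://trove.nla.gov.au/ndp/imageservice/nla.news-page{page_id}/print")
    return urls
```

Keeping the lookups separate from the loop means that if you decide the "first page id + 1" shortcut is safe enough, only `find_page_id` has to change.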

Hope this helps.

Cheers, Tim

[…] already had a script that would generate a basic time series from a Trove query string. It simply takes the query, fires […]

[…] QueryPic (I got a bit sick of ‘search summariser’ and ‘graph-maker thing’). The first version just harvested data and left all the graph-making to you. But QueryPic does it all! It harvests the […]

[…] 18 months ago I created a little Python script to visualise search results in Trove’s collection of digitised newspapers. After a bit more […]